Interesting article by John Markoff on artificial neural networks, learning theorems and big data problems. Using an artificial intelligence technique inspired by theories about how the brain recognizes patterns, technology companies are reporting startling gains in fields as diverse as computer vision, speech recognition and the identification of new molecules... Click on the title to learn more.

If you are eager to understand and exploit the magnificent promise of big data, this post is for you. Alternatively, if you haven't really thought about big data yet, this is an early warning system. If you think that big data is the biggest news on the block and that the leading journalists, consultants and analysts can't possibly be wrong, here's an alternative viewpoint. For the record... Jonathan MacDonald offers a different take on Big Data. Worth reading and thinking about.

Google is a company in which fact-based decision-making is part of the DNA and where Googlers (that is what Google calls its employees) speak the language of data as part of their culture. At Google the aim is that all decisions are based on data, analytics and scientific experimentation. This article over on Smart Data Collective gives good insight into the evidence-based decision processes they use. Interesting read. Click on the image or the title for info.

With initiatives like UN Pulse looking at using big data techniques for development, Larry Irving discusses ideas for using mobile to change the world at the 2012 Social Good Summit. In 1999, half of the world had either never used a phone or had to travel more than two hours to reach the nearest one. Years later, mobile devices are being used in extremely innovative ways to connect and empower people around the world. Click on the image or title to learn more.

You name it, the government has a pile of data about it: genomics, energy use, the weather and more. Various open data and big data initiatives at the federal government aim to make this information available to anyone who wants it.

Found this interesting: MongoDB has native support for geospatial indexes and extensions to the query language to support many different ways of querying your geospatial documents. We will touch on all of the available features of the MongoDB geospatial support point by point, including:

Query $near a point with a maximum distance around that point

Set the minimum and maximum range for the 2d space letting you map any data to the space

GeoNear command lets you return the distance from each point found

$within query lets you set a shape for your query, using a circle, box or arbitrary polygon, so you can map complex geo queries such as congressional districts or post code zones.
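The query shapes listed above can be sketched as plain query documents. This is a minimal illustration, not code from the linked article: the field name `loc`, the helper function names and all coordinates are assumptions made for the example. The operators themselves (`$near`, `$maxDistance`, `$within`, `$center`, `$box`, `$polygon`) belong to MongoDB's legacy 2d query language; newer MongoDB releases use `$geoWithin` in place of `$within`.

```python
# Build MongoDB legacy 2d geospatial query documents as plain dicts, so the
# sketch runs without a database. The field name "loc", the helper names and
# the coordinates are hypothetical.

def near_query(field, point, max_distance):
    """$near a point, limited to max_distance (in the index's coordinate units)."""
    return {field: {"$near": list(point), "$maxDistance": max_distance}}


def within_circle(field, center, radius):
    """$within a circle given by its center point and radius."""
    return {field: {"$within": {"$center": [list(center), radius]}}}


def within_box(field, bottom_left, top_right):
    """$within a rectangle given by two opposite corners."""
    return {field: {"$within": {"$box": [list(bottom_left), list(top_right)]}}}


def within_polygon(field, vertices):
    """$within an arbitrary polygon, e.g. a district or post code boundary."""
    return {field: {"$within": {"$polygon": [list(v) for v in vertices]}}}


# Example query documents for a hypothetical "places" collection.
print(near_query("loc", (50, 50), 5))
print(within_circle("loc", (50, 50), 10))
print(within_box("loc", (0, 0), (100, 100)))
print(within_polygon("loc", [(0, 0), (3, 6), (6, 0)]))
```

With a driver such as PyMongo, each dict would be passed to a collection's `find()` call once a 2d index exists on the field. The minimum and maximum range mentioned above is set when that index is created.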

Click on the image or the title to learn more.

Suggested by Jed Fisher

Thanks to Jed for spotting this. Barry Devlin's blog offers good insight into the emerging discussions around unified data management platforms that can scale from small to big data architectures. Barry's recent white paper on Data Zoos (linked from the article) expands on the points made. Both are worth a read. Click on the image or the title for more info.

If you use (or are planning to use) HPCC for large scale data processing then Juan Negron has a great post on configuring an Ubuntu cluster with HPCC using Juju. He walks you through creating the Juju charm and deploying the cluster. If large scale data processing is your thing then it's worth a read. Click on the image or title to learn more.

With the recent Hurricane Sandy reminding us that Mother Nature rules, the storm is also serving as a teaching tool for companies whose business is big data. Gigaom has a good article covering various companies applying data analytics in the context of the storm. Click on the image or title to learn more.

Titan is the latest supercomputer to be deployed at Oak Ridge National Laboratory (ORNL), although it's technically a significant upgrade rather than a brand new installation. Jaguar, the supercomputer being upgraded, featured 18,688 compute nodes - each with a 12-core AMD Opteron CPU. Titan takes the Jaguar base, maintaining the same number of compute nodes, but moves to 16-core Opteron CPUs paired with an NVIDIA Kepler K20 GPU per node. The result is 18,688 CPUs and 18,688 GPUs, all networked together to make a supercomputer that should be capable of landing at or near the top of the TOP500 list. Click on the image or the title to learn more

Greenplum have open sourced their data collaboration platform Chorus as part of their commitment to OpenChorus (www.openchorus.org). Chorus is a Facebook-like environment for teams to collaborate around data. It is licensed under the Apache 2.0 license, so if you are building team tools around data processes OpenChorus is probably worth a look. Click on the image or the title to learn more.

eWeek: "Enterprises to Slowly Adopt Windows 8, Big Data Jobs to Grow: Gartner". Big data is also predicted to have a big impact on enterprises and the IT job market, with big data demand reaching 4.4 million jobs globally by 2014.

Suggested by Jed Fisher

TechCrunch have an article explaining why investors are chasing the Big Data market and are likely to for some time. Splice Machine raised $4 million to develop its SQL Engine for big data apps. MongoHQ raised $6 million for its database as a service. A third startup, Bloomreach, announced $25 million in funding for its big data applications. These three companies provide examples for why the investor community will continue to invest in big data startups for many years to come. All reflect a changing dynamic: the rise of the big data app and the need for a new data infrastructure. These two converging trends now drive funding for a widening number of startups that make data functional inside and outside the enterprise. Data functionality, a term Gartner Research used in a report it published this week about how big data will drive $232 billion in IT spending through 2016, speaks to why investment will continue to flow into companies such as Splice Machine, MongoHQ and Bloomreach. Click on the image or the title to learn more.

Interesting interview with Rajesh Janey, President, India & SAARC, EMC, on EMC's big data strategy and its relevance to the Indian ICT industry. Click on the image or title to learn more.

Get into a cab and it's safe to assume the driver knows the ins, outs, shortcuts and potential traffic tie-ups between you and your destination. That kind of knowledge comes from years of experience, and IBM is taking a similar tack that blends real-time data and historical information into a new breed of traffic prediction. IBM is testing the new traffic-management technology in a pilot program in Lyon, France, that’s designed to provide the city’s transportation engineers with “real-time decision support” so they can proactively reduce congestion. Called Decision Support System Optimizer (DSSO), the technology uses IBM’s Data Expansion Algorithm to combine old and new data to predict future traffic flow. Over time the system “learns” from successful outcomes to fine-tune future recommendations. Click on the title or image to learn more.

Sometimes the people we work with are biologists, systems scientists, engineers, analysts, ecologists, economists etc. Whenever we explain what we do, the common pattern is one part data, one part data analysis, one part simulation, one part data visualization and one part subject matter expertise. All parts are essential in finding solutions to complex problems, but sometimes I stumble explaining who uses our tools and how come they are in lots of different professions. Do we build tools for animators, biologists, engineers, analysts, systems scientists? Well, actually all of them, but to explain why eloquently, I should have simply asked IBM's James Kobielus. James points out that all these folks are investigators and now have a new name: data scientists. Short video and worth a watch.

Government agencies are collecting vast amounts of data, but they're struggling just to store it, let alone analyze it to improve efficiency, accuracy and forecasts. On average, government agencies store 1.61 petabytes of data, but expect to be storing 2.63 petabytes within the next two years. These data include: reports from other government agencies at various levels, reports generated by field staff, transactional business data, scientific research, imagery/video, Web interaction data and reports filed by non-government agencies. While government agencies collect massive amounts of data, MeriTalk's report found that only 60 percent of IT professionals say their agency analyzes the data collected, and less than 40 percent say their agencies use the data to make strategic decisions. That includes U.S. Department of Defense and intelligence agencies, which on average are even farther behind than civilian agencies when it comes to Big Data. While 60 percent of civilian agencies are exploring how Big Data could be brought to bear on their work, only 42 percent of DoD/intel agencies are doing the same. Click on the image or the title to learn more.
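As a rough worked example, the two storage figures quoted above imply a total growth of roughly 63 percent over the two-year period. The sketch below derives that and the equivalent compound annual rate. It assumes steady compounding across exactly two years, which the report summary does not state, and the variable names are illustrative.

```python
# Implied growth in average agency storage, using the figures quoted above.
# Assumption: growth compounds evenly over exactly two years.
current_pb = 1.61   # petabytes stored today, on average
expected_pb = 2.63  # petabytes expected within two years
years = 2

total_growth = expected_pb / current_pb - 1
annual_growth = (expected_pb / current_pb) ** (1 / years) - 1

print(f"Total growth over {years} years: {total_growth:.1%}")
print(f"Implied compound annual growth: {annual_growth:.1%}")
```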

Results from the Kalido survey in London, Big Data Analytics 2012. Key takeaways: only 15% had a Big Data Analytics project underway and another 37% planned to do something within 1 year. However, 26% have no timeline in place. This matches our internal surveys, where we are seeing companies with a lot of machine-based data (log files, call data records and the like) looking at the technologies, while general business is adopting a wait-and-see approach to how this matures, pans out and proves relevant. Click on the image or the title to learn more.

Read Accenture Federal Services’ summary report and learn how our federal analytics helps accelerate cost reductions while meeting agency needs.

Vincent Huang has a great set of posts at Ericsson Labs on preserving privacy in big data analytics. Over the last few years there has been a growing trend in the international development world to highlight the use of big data for development, using mobile telephony call records, Twitter postings etc. as raw data sets that can be mined for indicators of social circumstances and systemic change. A key first step in this process is anonymizing the data so individuals cannot be easily identified from distilled results. Vincent's work discusses the requirements and algorithms used and is both a fascinating and essential read for anyone working in this area. Must-read posts if you are involved in big data for development or working with private, individually identifiable data sets. Click on the image or title to learn more.
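To make the anonymization step concrete, here is a minimal sketch of one common building block: replacing phone numbers in call records with keyed-hash pseudonyms. This is an illustrative assumption, not the approach described in Vincent's posts. The record layout, field names and key are hypothetical, and pseudonymization alone does not guarantee that individuals cannot be re-identified from behavioural patterns.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would be random and stored securely.
SECRET_KEY = b"replace-with-a-random-secret"


def pseudonymize(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def anonymize_record(record: dict) -> dict:
    """Return a copy of a call record with caller and callee pseudonymized."""
    cleaned = dict(record)
    for field in ("caller", "callee"):
        cleaned[field] = pseudonymize(record[field])
    return cleaned


# Hypothetical call data records.
records = [
    {"caller": "+15550001", "callee": "+15550002", "duration_s": 42},
    {"caller": "+15550001", "callee": "+15550003", "duration_s": 7},
]
anonymized = [anonymize_record(r) for r in records]
for row in anonymized:
    print(row)
```

Because the same number always maps to the same pseudonym, calling patterns can still be analysed in aggregate without the raw numbers appearing in the data set.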

Over the last year we have been experimenting with different data processing platforms including Hadoop, HPCC, several graph databases and NoSQL databases. We selected a hybrid of Storm and HPCC since combined they are a great match to our processing needs, and both have permissive open source licenses and good resources. Many big data articles fail to mention that big data applications have varying requirements and that the different available toolsets suit different applications and circumstances. One of the reasons we went with HPCC is that we have good C++ skills on our team, and we liked the ECL language and systems architecture. We could see how our target workflows would map (it helps that the Lexis Nexis team have addressed similar areas in the past and it shows in the product) and we could easily see how to integrate the HPCC functionality with our C++, Java and Python tools. If we were a pure Java-only house then Hadoop, Cascading and Hive would be a much better match for non-real-time processing. Tools like Storm are better architected for real-time event processing (hence our hybrid choice). One of the essential lessons and takeaways is that each domain and circumstance has different requirements. You have to play with the technologies, test them against workflows and see what works for your problem domain, performance requirements and team skills. For evaluating HPCC a great resource is Arjuna's blog. You will find worked examples, discussion of trade-offs and approaches, and a lot of good information. If you are interested in learning about a great Big Data platform, it's a good place to start. Click on the image or the title to learn more.

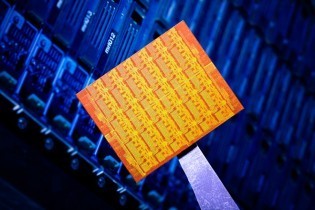

Wired has an interesting article on mobile computing. As ARM seeks to put cell phone chips into our supercomputers, Intel is doing the reverse. The lines between mobile hardware and data center hardware are blurring. Intel’s experimental Single-chip Cloud Computer project, or SCC, is a 48-core chip that acts as a “network” of processors on a single chip, with two cores per node. The nodes communicate with each other in much the same way nodes in a data center cluster would. While these types of architectures are today used in big data applications that run in a server cloud environment, there are many cases where a hybrid model would make the most sense, including artificial intelligence, machine vision and augmented reality applications. Click on the image or the title to learn more.

There are a bunch of cloud and distributed computing blogs worth reading including:

Simon Wardley – Bits or pieces?

Ben Kepes – Diversity Limited

David Linthicum – The Infoworld Cloud Computing Blog

Krishnan Subramanian – CloudAve

But the one I keep coming back to is Werner Vogels – All Things Distributed. It's good for insight and also for some great links to essential papers by Jim Gray that are deeply relevant to distributed computing developments. Certainly worth reading.

I am not usually a fan of market analysts, and especially of market reports that declare X market worth zigabillions with no qualification of market sizing methodology, categorization, data source or actual product adoption. However, John Rymer is a Forrester analyst who has a rather good "crabby old guy" rant on big data. Read the rant; it's well worth noting, as are the closing lines: "My hope is that we start zeroing in on the scenarios and the value to enterprises of the various big data solutions that address each scenario. By doing so, we'll unlock the value of big data a lot faster." Could not agree more. Click on the image or title to learn more.

Interesting article on CMSWire regarding insight and big data. What is the Big Data Fallacy? Data does provide information, and more data generally gives more information. However, the fallacy of big data is that more data doesn’t lead to proportionately more information. In fact, the more data you have, the less information you gain as a proportion of the data. The return on extractable information from any amount of big data asymptotically diminishes as your data volume increases. In nearly all realistic data sets (especially big data), the amount of information one can extract will be a very tiny fraction of the data volume: information << data. What about insights? How does that relate to information and data? All insights are information, but not all information provides insight. In order to provide insight, information must be: Interpretable, Relevant, Novel. Click on the image or the title to learn more.
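The diminishing-returns claim can be illustrated with a toy model. The sketch below is an assumption-laden illustration rather than the article's own analysis: it treats the number of distinct values seen so far as a crude proxy for "information" and draws records uniformly at random from a fixed pool of 1,000 possible values. Both choices are hypothetical simplifications.

```python
import random

# Toy model: "information" is approximated by the number of distinct values
# observed, and records are drawn uniformly from a fixed pool. Both are
# simplifying assumptions made only for illustration.
POSSIBLE_VALUES = 1_000
rng = random.Random(42)  # fixed seed so the run is repeatable
stream = [rng.randrange(POSSIBLE_VALUES) for _ in range(10_000)]


def distinct_fraction(records, n):
    """Share of the first n records that contribute a previously unseen value."""
    return len(set(records[:n])) / n


checkpoints = [100, 1_000, 10_000]
fractions = [distinct_fraction(stream, n) for n in checkpoints]

for n, fraction in zip(checkpoints, fractions):
    print(f"{n:>6} records: distinct values are {fraction:.1%} of the data")
```

The count of distinct values keeps rising as records accumulate, but its share of the total volume shrinks, which is the "information << data" pattern the article describes.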
