Your new post is loading...

Your new post is loading...

A recently introduced approach termed ``Assembly Theory", featuring a computable index based on basic principles of statistical compression has been claimed to be a novel and superior approach to classifying and distinguishing living from non-living systems and the complexity of molecular biosignatures. Here, we demonstrate that the assembly pathway method underlying this index is a suboptimal restricted version of Huffman's encoding (Fano-type), widely adopted in computer science in the 1950s, that is comparable (or inferior) to other popular statistical compression schemes. We show how simple modular instructions can mislead the assembly index, leading to failure to capture subtleties beyond trivial statistical properties that are pervasive in biological systems. We present cases whose low complexities can arbitrarily diverge from the random-like appearance to which the assembly pathway method would assign arbitrarily high statistical significance, and show that it fails in simple cases (synthetic or natural). Our theoretical and empirical results imply that the assembly index, whose computable nature we show is not an advantage, does not offer any substantial advantage over existing concepts and methods. Alternatives are discussed.

|

Rescooped by

Hector Zenil

from Papers

|

We show that if evolution is algorithmic in any form and can thus be considered a program in software space, the emergence of a natural algorithmic probability distribution has the potential to become an accelerating mechanism. We simulate the application of algorithmic mutations to binary matrices based on numerical approximations to algorithmic probability, comparing the evolutionary speed to the alternative hypothesis of uniformly distributed mutations for a series of matrices of varying complexity. When the algorithmic mutation produces unfit organisms---because mutations may lead to, for example, syntactically useless evolutionary programs---massive extinctions may occur. We show that modularity provides an evolutionary advantage also evolving a genetic memory. We demonstrate that such regular structures are preserved and carried on when they first occur and can also lead to an accelerated production of diversity and extinction, possibly explaining natural phenomena such as periods of accelerated growth of the number of species (e.g. the Cambrian explosion) and the occurrence of massive extinctions (e.g. the End Triassic) whose causes are a matter of considerable debate. The approach introduced here appears to be a better approximation to actual biological evolution than models based upon the application of mutation from uniform probability distributions, and because evolution by algorithmic probability converges faster to regular structures (both artificial and natural, as tested on a small biological network), it also approaches a formal version of open-ended evolution based on previous results. The results validate the motivations and results of Chaitin's Metabiology programme. We also show that the procedure has the potential to significantly accelerate solving optimization problems in the context of artificial evolutionary algorithms. Algorithmically probable mutations reproduce aspects of evolution such as convergence rate, genetic memory, modularity, diversity explosions, and mass extinction Santiago Hernández-Orozco, Hector Zenil, Narsis A. Kiani Click here to edit the content

Via Complexity Digest

The 25th International Workshop on Cellular Automata and Discrete Complex Systems AUTOMATA 2019 will take place on June 26-28 in Guadalajara, Mexico. Proceedings Accepted full papers will appear in the proceedings published by Springer in the Lecture Notes in Computer Science series. About the Conference AUTOMATA 2019 is the twenty-fifth workshop in a series of events established in 1995. These workshops aim: To establish and maintain a permanent, international, multidisciplinary forum for the collaboration of researchers in the field of Cellular Automata (CA) and Discrete Complex Systems (DCS). To provide a platform for presenting and discussing new ideas and results. To support the development of theory and applications of CA and DCS (e.g. parallel computing, physics, biology, social sciences, and others) as long as fundamental aspects and their relations are concerned. To identify and study within an inter- and multidisciplinary context, the important fundamental aspects, concepts, notions and problems concerning CA and DCS.

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

New paper sheds light on the pervasiveness of Turing universality by showing a series of behavioural boundary crossing results, including emulations (for all initial conditions) of Wolfram class 2 Elementary Cellular Automata (ECA) by Class 1 ECA, emulations of Classes 1, 2 and 3 ECA by Class 2 and 3 ECA, and of Classes 1, 2 and 3 by Class 3 ECA, along with results of even greater emulability for general CA (neighbourhood r = 3/2), including Class 1 CA emulating Classes 2 and 3, and Classes 3 and 4 emulating all other classes (1, 2, 3 and 4). The emulations occur with only a linear overhead and can be considered computationally efficient. The paper also introduces a concept of emulation networks, deriving a topologically-based measure of complexity based upon out- and in-degree connectivity establishing bridges to fundamental ideas of complexity, universality, causality and dynamical systems.

Via Complexity Digest

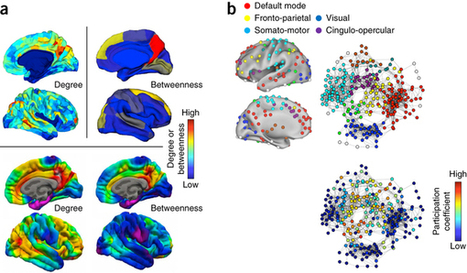

The confluence of new approaches in recording patterns of brain connectivity and quantitative analytic tools from network science has opened new avenues toward understanding the organization and function of brain networks. Descriptive network models of brain structural and functional connectivity have made several important contributions; for example, in the mapping of putative network hubs and network communities. Building on the importance of anatomical and functional interactions, network models have provided insight into the basic structures and mechanisms that enable integrative neural processes. Network models have also been instrumental in understanding the role of structural brain networks in generating spatially and temporally organized brain activity. Despite these contributions, network models are subject to limitations in methodology and interpretation, and they face many challenges as brain connectivity data sets continue to increase in detail and complexity. Contributions and challenges for network models in cognitive neuroscience

• Olaf Sporns

Nature Neuroscience (2014) http://dx.doi.org/10.1038/nn.3690

Via Complexity Digest, Shady El Damaty

|

Rescooped by

Hector Zenil

from Papers

|

We study the asymptotic behaviour of symbolic computing systems, notably one-dimensional cellular automata (CA), in order to ascertain whether and at what rate the number of complex versus simple rules dominate the rule space for increasing neighbourhood range and number of symbols (or colours), and how different behaviour is distributed in the spaces of different cellular automata formalisms. Using two different measures, Shannon's block entropy and Kolmogorov complexity, the latter approximated by two different methods (lossless compressibility and block decomposition), we arrive at the same trend of larger complex behavioural fractions. We also advance a notion of asymptotic and limit behaviour for individual rules, both over initial conditions and runtimes, and we provide a formalisation of Wolfram's classification as a limit function in terms of Kolmogorov complexity. Asymptotic Behaviour and Ratios of Complexity in Cellular Automata Hector Zenil, Elena Villarreal-Zapata http://arxiv.org/abs/1304.2816

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

Humans are sensitive to complexity and regularity in patterns (Falk & Konold, 1997; Yamada, Kawabe, & Miyazaki, 2013). The subjective perception of pattern complexity is correlated to algorithmic (or Kolmogorov-Chaitin) complexity as defined in computer science (Li & Vitányi, 2008), but also to the frequency of naturally occurring patterns (Hsu, Griffiths, & Schreiber, 2010). However, the possible mediational role of natural frequencies in the perception of algorithmic complexity remains unclear. Here we reanalyze Hsu et al. (2010) through a mediational analysis, and complement their results in a new experiment. We conclude that human perception of complexity seems partly shaped by natural scenes statistics, thereby establishing a link between the perception of complexity and the effect of natural scene statistics.

Natural scene statistics mediate the perception of image complexity

Nicolas Gauvrit, Fernando Soler-Toscano & Hector Zenil

Visual Cognition

http://dx.doi.org/10.1080/13506285.2014.950365

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

We survey concepts at the frontier of research connecting artificial, animal and human cognition to computation and information processing---from the Turing test to Searle's Chinese Room argument, from Integrated Information Theory to computational and algorithmic complexity. We start by arguing that passing the Turing test is a trivial computational problem and that its pragmatic difficulty sheds light on the computational nature of the human mind more than it does on the challenge of artificial intelligence. We then review our proposed algorithmic information-theoretic measures for quantifying and characterizing cognition in various forms. These are capable of accounting for known biases in human behavior, thus vindicating a computational algorithmic view of cognition as first suggested by Turing, but this time rooted in the concept of algorithmic probability, which in turn is based on computational universality while being independent of computational model, and which has the virtue of being predictive and testable as a model theory of cognitive behavior.

The Information-theoretic and Algorithmic Approach to Human, Animal and Artificial Cognition

Nicolas Gauvrit, Hector Zenil, Jesper Tegnér http://arxiv.org/abs/1501.04242

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

In the past decades many definitions of complexity have been proposed. Most of these definitions are based either on Shannon's information theory or on Kolmogorov complexity; these two are often compared, but very few studies integrate the two ideas. In this article we introduce a new measure of complexity that builds on both of these theories. As a demonstration of the concept, the technique is applied to elementary cellular automata and simulations of the self-organization of porphyrin molecules.

Complexity Measurement Based on Information Theory and Kolmogorov Complexity

Leong Ting Lui, Germán Terrazas, Hector Zenil, Cameron Alexander, Natalio Krasnogor

Artificial Life Spring 2015, Vol. 21, No. 2: 205–224. http://dx.doi.org/10.1162/ARTL_a_00157

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

Can we quantify the change of complexity throughout evolutionary processes? We attempt to address this question through an empirical approach. In very general terms, we simulate two simple organisms on a computer that compete over limited available resources. We implement Global Rules that determine the interaction between two Elementary Cellular Automata on the same grid. Global Rules change the complexity of the state evolution output which suggests that some complexity is intrinsic to the interaction rules themselves. The largest increases in complexity occurred when the interacting elementary rules had very little complexity, suggesting that they are able to accept complexity through interaction only. We also found that some Class 3 or 4 CA rules are more fragile than others to Global Rules, while others are more robust, hence suggesting some intrinsic properties of the rules independent of the Global Rule choice. We provide statistical mappings of Elementary Cellular Automata exposed to Global Rules and different initial conditions onto different complexity classes.

Interacting Behavior and Emerging Complexity

Alyssa Adams, Hector Zenil, Eduardo Hermo Reyes, Joost Joosten http://arxiv.org/abs/1512.07450

Via Complexity Digest

In evolutionary biology, attention to the relationship between stochastic organisms and their stochastic environments has leaned towards the adaptability and learning capabilities of the organisms rather than toward the properties of the environment. This article is devoted to the algorithmic aspects of the environment and its interaction with living organisms. We ask whether one may use the fact of the existence of life to establish how far nature is removed from algorithmic randomness. The paper uses a novel approach to behavioral evolutionary questions, using tools drawn from information theory, algorithmic complexity and the thermodynamics of computation to support an intuitive assumption about the near optimal structure of a physical environment that would prove conducive to the evolution and survival of organisms, and sketches the potential of these tools, at present alien to biology, that could be used in the future to address different and deeper questions. We contribute to the discussion of the algorithmic structure of natural environments and provide statistical and computational arguments for the intuitive claim that living systems would not be able to survive in completely unpredictable environments, even if adaptable and equipped with storage and learning capabilities by natural selection (brain memory or DNA). Zenil, Hector; Gershenson, Carlos; Marshall, James A.R.; Rosenblueth, David A. 2012. "Life as Thermodynamic Evidence of Algorithmic Structure in Natural Environments." Entropy 14, no. 11: 2173-2191. http://www.mdpi.com/1099-4300/14/11/2173

Via Complexity Digest, FuturICT

|

Rescooped by

Hector Zenil

from Papers

|

In a previous article, I suggested a method for testing the algorithmicity of a natural/physical process using the concept of Levin's universal distribution. Here, I explain this method in the context of the problem formulated by L. Floridi concerning the testability of pancomputationalism. Then, I will introduce a behavioural battery of programmability tests for natural computation, as an example of a computational philosophy approach. That is to tackle a simplified version of a complex philosophical question with a computer experiment. I go on to demonstrate the application of this novel approach in a case study featuring Conway's Game of Life. In this context, I also briefly discuss another question raised by Floridi, concerning a grand unified theory of information, which I think is deeply connected to the grand unification of physics. Algorithmicity and programmability in natural computing with the Game of Life as in silico case study

Hector Zenil

Journal of Experimental & Theoretical Artificial Intelligence

http://dx.doi.org/10.1080/0952813X.2014.940686

Via Complexity Digest

|

Most machine learning approaches extract statistical features from data, rather than the underlying causal mechanisms. A different approach analyses information in a general way by extracting recursive patterns from data using generative models under the paradigm of computability and algorithmic information theory.

Complex behaviour emerges from interactions between objects produced by different generating mechanisms. Yet to decode their causal origin(s) from observations remains one of the most fundamental challenges in science. This paper introduces a universal, unsupervised and parameter-free model-oriented approach, based on the seminal concept and the first principles of algorithmic probability, to decompose an observation into its most likely algorithmic generative models.

|

Rescooped by

Hector Zenil

from Papers

|

The slime mould Physarum polycephalum has been used in developing unconventional computing devices for in which the slime mould played a role of a sensing, actuating, and computing device. These devices treated the slime mould rather as an active living substrate yet the slime mould is a self-consistent living creature which evolved for millions of years and occupied most part of the world, but in any case, that living entity did not own true cognition, just automated biochemical mechanisms. To "rehabilitate" the slime mould from the rank of a purely living electronics element to a "creature of thoughts" we are analyzing the cognitive potential of P. polycephalum. We base our theory of minimal cognition of the slime mould on a bottom-up approach, from the biological and biophysical nature of the slime mould and its regulatory systems using frameworks suh as Lyon's biogenic cognition, Muller, di Primio-Lengeler\'s modifiable pathways, Bateson's "patterns that connect" framework, Maturana's autopoetic network, or proto-consciousness and Morgan's Canon. Slime mould: the fundamental mechanisms of cognition

Jordi Vallverdu, Oscar Castro, Richard Mayne, Max Talanov, Michael Levin, Frantisek Baluska, Yukio Gunji, Audrey Dussutour, Hector Zenil, Andrew Adamatzky

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

New Turing-universality results in Elementary Cellular Automata in recent published paper: "Rule Primality, Minimal Generating Sets and Turing-Universality in the Causal Decomposition of Elementary Cellular Automata" by Jürgen Riedel and Hector Zenil New paper discovers and proves new universality results in ECA, namely, that the Boolean composition of ECA rules 51 and 118, and 170, 15 and 118 can emulate ECA rule 110 and are thus Turing-universal coupled systems. It is introduced a 4-colour Turing-universal cellular automaton that carries the Boolean composition of 2 and 3 ECA rules emulating ECA rule 110 under multi-scale coarse-graining. They find that rules generating the ECA rulespace by Boolean composition are of low complexity and comprise prime rules implementing basic operations that when composed enable complex behaviour. They also found a candidate minimal set with only 38 ECA prime rules — and several other small sets — capable of generating all other (non-trivially symmetric) 88 ECA rules under Boolean composition.

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

A paper recently published in the Philosophical Transactions of the Royal Society A under the title “A probabilistic framework for identifying biosignatures using Pathway Complexity" claims to offer a revolutionary measure potentially capable of distinguishing life from non-life and even discerning life on other planets by finding biosignatures. The method proposed by its authors consists roughly in finding the generative grammar behind an object and then counting the number of steps needed to generate said object from its compressed form. Unfortunately, this does not amount to a new measure of complexity. The first part of the algorithm is mostly a description of Huffman's coding algorithm (see ref. below) and represents the way in which most popular lossless compression algorithms are implemented: finding the building blocks that can best compress a string by minimising redundancies, decomposing it into the statistically smallest collection of components that reproduce it without loss of information by traversing the string and finding repetitions.

Via Complexity Digest

Most metro (or subway) systems are real-life examples of complex networks with different properties. This Demonstration shows the networks of 33 of the largest metro systems in the world with graph-theoretic and visual information. Node size and edge color are depicted following a heat map determined by the degree of nodes and the centrality of edges; the larger a node, the greater the link degree, and the closer to red, the more central an edge. You can select different graph embeddings to highlight different properties of the networks. Only connecting or terminal stations are considered. Some discrepancies with current systems might exist (for example, the Shanghai metro has substantially increased recently).

|

Rescooped by

Hector Zenil

from Papers

|

This paper examines the claim that cellular automata (CA) belonging to Class III (in Wolfram's classification) are capable of (Turing universal) computation. We explore some chaotic CA (believed to belong to Class III) reported over the course of the CA history, that may be candidates for universal computation, hence spurring the discussion on Turing universality on both Wolfram's classes III and IV. Computation and Universality: Class IV versus Class III Cellular Automata Genaro J. Martinez, Juan C. Seck-Tuoh-Mora, Hector Zenil http://arxiv.org/abs/1304.1242

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

Kolmogorov-Chaitin complexity has long been believed to be unapproachable when it comes to short sequences (e.g. of length 5-50). However, with the newly developed coding theorem method the complexity of strings of length 2-11 can now be numerically estimated. We present the theoretical basis of algorithmic complexity for short strings (ACSS) and describe an R-package providing functions based on ACSS that will cover psychologists' needs and improve upon previous methods in three ways: (1) ACSS is now available not only for binary strings, but for strings based on up to 9 different symbols, (2) ACSS no longer requires time-consuming computing, and (3) a new approach based on ACSS gives access to an estimation of the complexity of strings of any length. Finally, three illustrative examples show how these tools can be applied to psychology.

Algorithmic complexity for psychology: A user-friendly implementation of the coding theorem method

Nicolas Gauvrit, Henrik Singmann, Fernando Soler-Toscano, Hector Zenil http://arxiv.org/abs/1409.4080

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

We explore the reprogramming capabilities of computer programs using cellular automata (CA). We show a series of boundary crossing results, including a Wolfram Class 1 Elementary Cellular Automaton (ECA) emulating a Class 2 ECA, a Class 2 ECA emulating a Class 3 ECA, and a Class 3 ECA emulating a Class 2 ECA, along with results of a similar type for general CA (neighbourhood range r=3/2), including a Class 1 CA emulating a Class 3 CA, Classes 3 and 4 CAs emulating Class 4 CAs, and Class 4 emulating Class 3 CAs. All these emulations occur with only a constant overhead, and hence are computationally efficient. By constructing emulation networks through an exhaustive search in the compiler space, we show that topological properties determining emulation direction, such as ingoing and outgoing hub degrees, suggest a topological classification of class 4 behaviour consistent with Turing universality conjectures. We also found that no hacking strategy based on compiler complexity or compiler similarity is suggested. We also introduce a definition of prime rules applicable to CAs-- analogous to that of prime numbers--according to which CA rules act as basic constructors of all other rules. We show a Turing universality result of a composition of ECA rules emulating rule 110. The approach yields a novel perspective on complexity, controllability, causality, and reprogrammability of even the simplest computer programs providing strong evidence that computation universality is ubiquitous. The results suggest that complexity is, or can be, generally driven by initial conditions, and are therefore in this sense more fundamental than even the underlying rules that we show can asymptotically carry any desired computation.

Cross-boundary Behavioural Reprogrammability of Cellular Automata from Emulation Networks

Jürgen Riedel, Hector Zenil http://arxiv.org/abs/1510.01671

Via Complexity Digest

|

Rescooped by

Hector Zenil

from Papers

|

Complexity measures are designed to capture complex behavior and quantify *how* complex, according to that measure, that particular behavior is. It can be expected that different complexity measures from possibly entirely different fields are related to each other in a non-trivial fashion. Here we study small Turing machines (TMs) with two symbols, and two and three states. For any particular such machine τ and any particular input x we consider what we call the 'space-time' diagram which is the collection of consecutive tape configurations of the computation τ(x). In our setting, we define fractal dimension of a Turing machine as the limiting fractal dimension of the corresponding space-time diagram. It turns out that there is a very strong relation between the fractal dimension of a Turing machine of the above-specified type and its runtime complexity. In particular, a TM with three states and two colors runs in at most linear time iff its dimension is 2, and its dimension is 1 iff it runs in super-polynomial time and it uses polynomial space. If a TM runs in time O(x^n) we have empirically verified that the corresponding dimension is (n+1)/n, a result that we can only partially prove. We find the results presented here remarkable because they relate two completely different complexity measures: the geometrical fractal dimension on the one side versus the time complexity of a computation on the other side. Fractal dimension versus computational complexity

Joost J. Joosten, Fernando Soler-Toscano, Hector Zenil http://arxiv.org/abs/1309.1779

Via Complexity Digest

A Computable Universe

Understanding and Exploring Nature as Computation

Edited by: Hector Zenil

This volume, with a foreword by Sir Roger Penrose, discusses the foundations of computation in relation to nature.

It focuses on two main questions: What is computation?

How does nature compute?

The contributors are world-renowned experts who have helped shape a cutting-edge computational understanding of the universe. They discuss computation in the world from a variety of perspectives, ranging from foundational concepts to pragmatic models to ontological conceptions and philosophical implications. The volume provides a state-of-the-art collection of technical papers and non-technical essays, representing a field that assumes information and computation to be key in understanding and explaining the basic structure underpinning physical reality. It also includes a new edition of Konrad Zuse's “Calculating Space” (the MIT translation), and a panel discussion transcription on the topic, featuring worldwide experts in quantum mechanics, physics, cognition, computation and algorithmic complexity. The volume is dedicated to the memory of Alan M Turing — the inventor of universal computation, on the 100th anniversary of his birth, and is part of the Turing Centenary celebrations. http://www.worldscientific.com/worldscibooks/10.1142/8306

Via Complexity Digest, Shady El Damaty

"My research focuses on applying information theory and complexity science to genomics, synthetic and network biology. With backgrounds in math, computer science and philosophy, I think of myself as a kind ofexperimental philosopher or a computational natural scientist. (Greg Chaitin once referred to me as a "new kind of practical theoretician")."

Via Colbert Sesanker

|

Rescooped by

Hector Zenil

from Papers

|

The evaluation of the complexity of finite sequences is key in many areas of science. For example, the notions of structure, simplicity and randomness are common currency in biological systems epitomized by a sequence of fundamental nature and utmost importance: the DNA. Nevertheless, researchers have for a long time avoided any practical use of the current accepted mathematical theory of randomness, mainly because it has been considered to be useless in practice [8]. Despite this belief, related notions such as lossless uncompressibility tests have proven relative success, in areas such as sequence pattern detection [21] and have motivated distance measures and classification methods [9] in several areas (see [19] for a survey), to mention but two examples among many others of even more practical use. The method presented in this paper aims to provide sound directions to explore the feasibility and stability of the evaluation of the complexity of strings by means different to that of lossless compressibility, particularly useful for short strings. The authors known of only two similar attempts to compute the uncomputable, one related to the estimation of a Chaitin Omega number [4], and of another seminal related measure of complexity, Bennett's Logical Depth [23], [27]. This paper provides an approximation to the output frequency distribution of all Turing machines with 5 states and 2 symbols which in turn allow us to apply a central theorem in the theory of algorithmic complexity based in the notion of algorithmic probability (also known as Solomonoff's theory of inductive inference) that relates frequency of production of a string and its Kolmogorov complexity hence providing, upon application of the theorem, numerical estimations of Kolmogorov complexity by a method different to lossless compression algorithms. Soler-Toscano F, Zenil H, Delahaye J-P, Gauvrit N (2014) Calculating Kolmogorov Complexity from the Output Frequency Distributions of Small Turing Machines. PLoS ONE 9(5): e96223. http://dx.doi.org/10.1371/journal.pone.0096223

Via Complexity Digest

|

Your new post is loading...

Your new post is loading...

Your new post is loading...

Your new post is loading...